Qwen3.5 KV Cache: Optimizing Memory and Speed for Long-Context AI

Key Takeaways

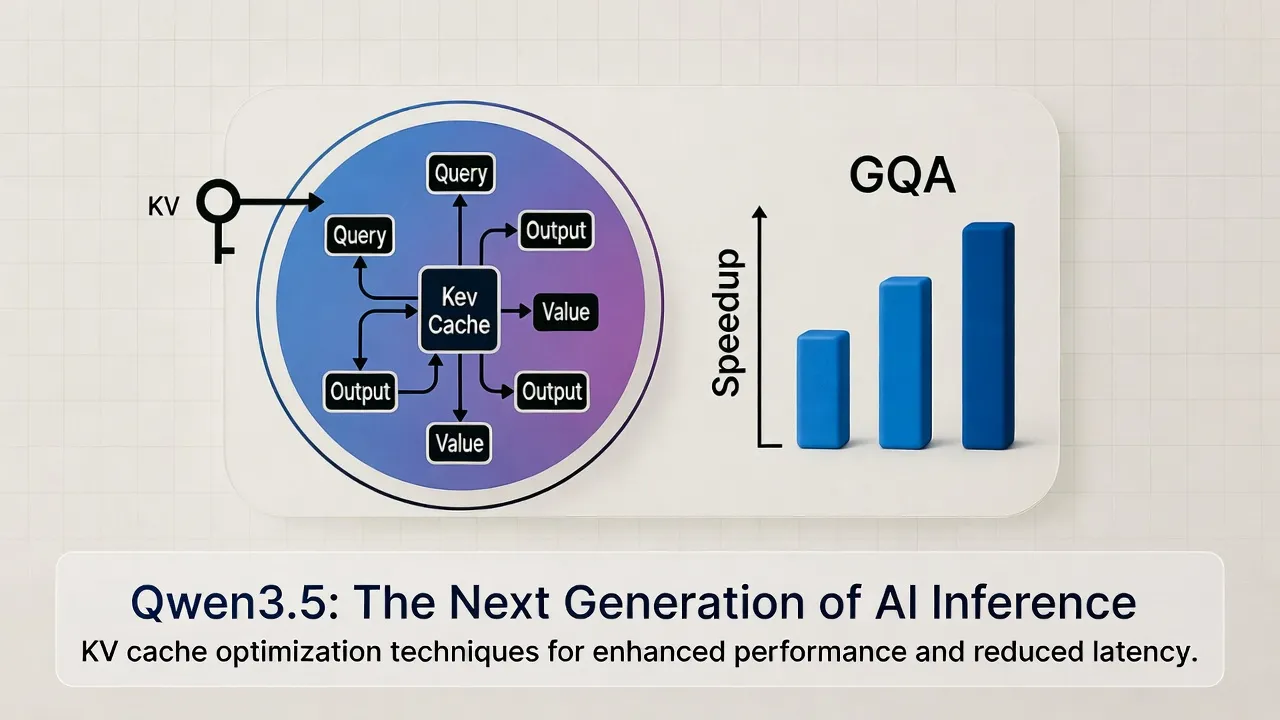

- Qwen3.5 uses a hybrid attention design in which 75% of layers run Gated DeltaNet linear attention. These layers keep a fixed-size recurrent state instead of a growing KV cache, which significantly reduces KV cache memory for long sequences.

- Grouped Query Attention (GQA) shares 4 KV heads across 16 query heads in the full-attention layers. This shrinks their KV cache and memory traffic while maintaining accuracy in multimodal tasks.

- Benchmarks demonstrate up to 50% lower memory usage compared to traditional models, enabling efficient inference on consumer hardware.

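- Partial RoPE and multimodal RoPE (M-RoPE) encode positions for text, images, and videos. M-RoPE gives visual tokens separate temporal, height, and width positions, which keeps cached states aligned across these diverse inputs.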

- Common pitfalls include mismanaging cache positions in incremental decoding, which can lead to degraded performance in long-context scenarios.

Introduction to Qwen3.5

Qwen3.5 is a significant advancement in Alibaba's Qwen series and builds on predecessors such as Qwen3 and Qwen2.5. The model is multimodal and handles text, images, and videos. Its base configuration has 32 layers, a hidden size of 4096, a vocabulary of 248,320 tokens, and a maximum of 32,768 position embeddings. These figures support the extended contexts that complex AI applications require.

Qwen3.5's design prioritizes inference efficiency, particularly through changes to key-value (KV) caching. These changes target a common bottleneck in large language models (LLMs): the memory needed to store attention state, which limits how far context length and batch size can scale.

The Role of KV Cache in Transformer Models

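In transformer architectures, the KV cache stores the key and value tensors already computed for previous tokens during autoregressive generation. At each decoding step, the model computes keys and values only for the new token and then attends over the cached ones. It does not reprocess the whole prefix. This reduces the cost of each step from quadratic to linear in the current sequence length.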
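The following toy sketch shows why caching is safe. It computes a single attention head step by step with a growing key/value cache, and the outputs match those from recomputing every key and value from scratch. It uses plain Python lists and made-up 2-dimensional weights. `W_q`, `W_k`, and `W_v` are illustrative assumptions, not Qwen3.5 parameters.

```python
import math

def project(x, w):
    """Multiply row vector x by matrix w (given as a list of rows)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def attend(q, keys, values):
    """Single-head scaled dot-product attention of one query over all keys/values so far."""
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(len(values[0]))]

# Illustrative weights and token embeddings (assumptions, not real model values)
W_q = [[1.0, 0.0], [0.0, 1.0]]
W_k = [[0.5, 0.1], [0.2, 0.9]]
W_v = [[1.0, 2.0], [0.3, -1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, 2.0]]

def step_without_cache(prefix):
    """No cache: re-project keys and values for the entire prefix at every step."""
    keys = [project(x, W_k) for x in prefix]
    values = [project(x, W_v) for x in prefix]
    return attend(project(prefix[-1], W_q), keys, values)

k_cache, v_cache = [], []

def step_with_cache(x):
    """With a cache: project only the newest token, append it, and attend over the cache."""
    k_cache.append(project(x, W_k))
    v_cache.append(project(x, W_v))
    return attend(project(x, W_q), k_cache, v_cache)

cached = [step_with_cache(x) for x in tokens]
recomputed = [step_without_cache(tokens[: t + 1]) for t in range(len(tokens))]
# Same outputs, but the cached path projected each token exactly once
print(all(abs(a - b) < 1e-12 for ca, rb in zip(cached, recomputed) for a, b in zip(ca, rb)))
```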

The trade-off is that a standard KV cache grows linearly with sequence length, and also with batch size, layer count, and KV head count. In long-context inference it is therefore often the primary memory bottleneck. Qwen3.5 mitigates this through the architectural choices described below, with the aim of using resources efficiently without sacrificing output quality.
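A back-of-the-envelope calculation makes the linear growth concrete. The sketch assumes a hypothetical full-attention model with 32 layers, 8 KV heads, head dimension 128, and BF16 storage. These are illustrative numbers, not Qwen3.5's configuration. The factor of 2 accounts for storing both keys and values.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size=1, bytes_per_elem=2):
    """Standard KV cache size: keys + values (factor 2) for every layer, KV head, and token."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem

# Hypothetical full-attention model (assumption): 32 layers, 8 KV heads, head_dim 128, BF16
for seq_len in (4_096, 32_768, 131_072):
    gib = kv_cache_bytes(32, 8, 128, seq_len) / 2**30
    print(f"{seq_len:>7} tokens -> {gib:.1f} GiB")
```

Under these assumptions, each token costs 128 KiB, so the cache grows in step with context length: 0.5 GiB at 4,096 tokens, 4 GiB at 32,768, and 16 GiB at 131,072.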

Qwen3.5's KV Cache Innovations

Qwen3.5 uses a hybrid attention system in which 75% of layers run Gated DeltaNet linear attention, a recurrent mechanism combined with causal convolutions. Every fourth layer uses full softmax attention with GQA. This 3:1 mix balances the sub-quadratic cost of linear attention against the expressive power of full attention.
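The 3:1 layout can be written out directly. This sketch assumes the full-attention layer is the last one in each group of four, which gives 0-indexed layers 3, 7, 11, and so on. The helper name `hybrid_layer_types` and the `full_attention_interval` parameter are illustrative. The actual model configuration may express the layout differently.

```python
def hybrid_layer_types(num_layers=32, full_attention_interval=4):
    """Assumed layout: the last layer of every group of `full_attention_interval` uses full attention."""
    return [
        "full_attention" if (i + 1) % full_attention_interval == 0 else "linear_attention"
        for i in range(num_layers)
    ]

layer_types = hybrid_layer_types()
full_layers = [i for i, kind in enumerate(layer_types) if kind == "full_attention"]
print(full_layers)  # [3, 7, 11, 15, 19, 23, 27, 31]
# Only full-attention layers keep a per-token KV cache. With identical attention heads, the part
# of the cache that grows with context is this fraction of an all-full-attention model:
print(len(full_layers) / len(layer_types))  # 0.25
```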

This hybrid design dramatically lowers KV cache size. A linear attention layer stores a fixed-size recurrent state that does not grow with sequence length. As a result, only the full-attention layers accumulate a per-token KV cache, which is what enables efficient handling of sequences up to 262,144 tokens on standard hardware.
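To see why the linear-attention state stays fixed, here is a minimal pure-Python sketch of the gated delta rule that Gated DeltaNet builds on. The rule is S_t = alpha_t * S_{t-1} (I - beta_t k_t k_t^T) + beta_t v_t k_t^T. The sketch rearranges it as alpha_t S_{t-1} + beta_t (v_t - alpha_t S_{t-1} k_t) k_t^T. The state S is a d_v x d_k matrix no matter how many tokens pass through. The sketch makes several simplifying assumptions:

- tiny dimensions;
- constant alpha and beta (in the real layer they are computed from the input);
- L2-normalized keys;
- no causal convolution, output gating, or multi-head structure.

```python
import math
import random

def gated_delta_step(S, k, v, alpha, beta):
    """One gated delta rule update on a d_v x d_k state S (list of rows):
    alpha*S + beta*(v - alpha*S k) k^T, which equals alpha*S(I - beta k k^T) + beta v k^T.
    """
    Sk = [sum(row[j] * k[j] for j in range(len(k))) for row in S]  # S_{t-1} k_t
    err = [v[i] - alpha * Sk[i] for i in range(len(v))]  # v_t minus the decayed memory's prediction
    return [[alpha * S[i][j] + beta * err[i] * k[j] for j in range(len(k))] for i in range(len(v))]

def read(S, q):
    """Layer output for query q: o_t = S_t q_t."""
    return [sum(row[j] * q[j] for j in range(len(q))) for row in S]

def unit(vec):
    """L2-normalize a vector."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

d_k, d_v = 4, 3  # toy sizes (assumption)
S = [[0.0] * d_k for _ in range(d_v)]
random.seed(0)
for _ in range(1000):  # 1,000 tokens stream through a state that never grows
    k = unit([random.gauss(0, 1) for _ in range(d_k)])
    v = [random.gauss(0, 1) for _ in range(d_v)]
    S = gated_delta_step(S, k, v, alpha=0.95, beta=0.5)
print(len(S), len(S[0]))  # still d_v x d_k: 3 4

# Delta-rule write: with beta=1 and a unit-norm key, reading that key back returns v
k, v = [1.0, 0.0, 0.0, 0.0], [0.3, -0.2, 0.7]
S = gated_delta_step(S, k, v, alpha=0.9, beta=1.0)
print(read(S, k))  # approximately [0.3, -0.2, 0.7]
```

The final check shows the "delta" part of the rule. With beta = 1 and a unit-norm key, the update overwrites whatever the state previously associated with that key.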

Grouped Query Attention improves efficiency further. The 16 query heads share only 4 KV heads, so each KV head serves a group of 4 query heads. This cuts the KV cache of the full-attention layers to a quarter of what 16 KV heads would need while preserving attention fidelity. Integration with Hugging Face's DynamicCache supports incremental decoding, in which past_key_values are reused from step to step.
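The grouping can be sketched as a mapping between head indices. The sketch assumes that consecutive query heads share a KV head, so query heads 0-3 use KV head 0 and so on. This matches the `repeat_kv`-style expansion used in many Hugging Face model implementations. The function names here are illustrative. Only the 4 KV heads are stored in the cache, and the expansion to 16 happens at compute time.

```python
def kv_head_for_query_head(q_head, num_q_heads=16, num_kv_heads=4):
    """GQA grouping (assumed): consecutive query heads share one KV head."""
    group_size = num_q_heads // num_kv_heads
    return q_head // group_size

def repeat_kv(kv_heads, n_rep):
    """Expand cached KV heads at compute time so each query head sees its group's KV."""
    return [head for head in kv_heads for _ in range(n_rep)]

mapping = [kv_head_for_query_head(h) for h in range(16)]
print(mapping)  # [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 3]
expanded = repeat_kv(["kv0", "kv1", "kv2", "kv3"], n_rep=4)
print(expanded[5])  # query head 5 uses kv1

# The cache stores only the KV heads, so per-token cache size scales with 4, not 16
print(f"GQA cache vs. 16 KV heads: {4 / 16:.0%}")  # 25%
```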

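Multimodal extensions add special tokens for images and videos, along with grid metadata that gives the temporal, height, and width extent of each visual input in patches. This metadata keeps KV state consistent across modalities. Partial RoPE applies rotary position embeddings to only 25% of each head's dimensions and leaves the rest position-independent, which is intended to help with long, diverse inputs.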
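A minimal sketch of partial RoPE follows. It assumes the rotate-half convention used by many Hugging Face implementations, base 10000, and a toy head dimension of 16, so only the first 4 dimensions rotate. The function name and defaults are illustrative, not Qwen3.5's exact code. The pass-through dimensions carry no position information. The rotated ones still make query-key scores depend only on relative position.

```python
import math

def partial_rope(x, position, rotary_fraction=0.25, base=10000.0):
    """Rotate the first `rotary_fraction` of dims (rotate-half convention); keep the rest as-is."""
    rotary_dim = int(len(x) * rotary_fraction)
    half = rotary_dim // 2
    rot, rest = x[:rotary_dim], x[rotary_dim:]
    out = [0.0] * rotary_dim
    for i in range(half):
        theta = position * base ** (-2 * i / rotary_dim)  # frequency i, as in standard RoPE
        c, s = math.cos(theta), math.sin(theta)
        x1, x2 = rot[i], rot[i + half]  # dims i and i + half form one rotated pair
        out[i] = x1 * c - x2 * s
        out[i + half] = x2 * c + x1 * s
    return out + list(rest)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

head_dim = 16  # toy size (assumption); only 4 dims rotate
q = [0.1 * (i + 1) for i in range(head_dim)]
k = [1.0 - 0.05 * i for i in range(head_dim)]

print(partial_rope(q, 5)[4:] == q[4:])  # True: pass-through dims are untouched
s1 = dot(partial_rope(q, 7), partial_rope(k, 3))
s2 = dot(partial_rope(q, 17), partial_rope(k, 13))
print(abs(s1 - s2) < 1e-9)  # True: the score depends only on the offset (7 - 3 == 17 - 13)
```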

Performance Analysis and Comparisons

Benchmarks indicate Qwen3.5 achieves near-lossless performance with KV cache sizes 30-70% smaller than equivalents like Qwen3. In long-context tasks, such as RULER-4K, it maintains high accuracy while reducing prefill time by up to 2x.

Compared to Qwen3, the model extends context length and adds robust multimodal support, with GQA providing 20-30% memory savings. Against Qwen2.5, hybrid attention yields superior efficiency on coding and reasoning benchmarks such as LiveCodeBench and MMLU-Pro.

Community tests on consumer GPUs show Qwen3.5 handling 1M+ token contexts with under 6GB KV cache for certain variants, far below the 28GB required by baseline models.

Addressing Edge Cases and Common Pitfalls

In multimodal scenarios, mismatched cache positions can misalign text and visual tokens. Using cache_position tensors, which index slots in the cache independently of padding, prevents this.
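The difference between model positions and cache slots is easiest to see with a left-padded batch. In this sketch the helper names are hypothetical. `position_ids` skip padding so that each prompt starts at position 0. `cache_positions` simply counts slots in the cache and is shared by every row. Hugging Face derives position IDs with a similar cumulative-sum approach, but it assigns padded slots a dummy value that may differ from the 0 used here.

```python
def position_ids_from_mask(mask):
    """Positions that skip left padding: running count of real tokens minus 1 (padding gets 0 here)."""
    out, count = [], 0
    for m in mask:
        count += m
        out.append(max(count - 1, 0))
    return out

def cache_positions(past_length, num_new_tokens):
    """Cache slots written this step: independent of padding and shared by every row in the batch."""
    return list(range(past_length, past_length + num_new_tokens))

masks = [[1, 1, 1, 1, 1], [0, 0, 1, 1, 1]]  # second prompt is left-padded (0 = padding)
print([position_ids_from_mask(m) for m in masks])  # [[0, 1, 2, 3, 4], [0, 0, 0, 1, 2]]
print(cache_positions(0, 5))  # prefill fills slots [0, 1, 2, 3, 4] for both rows
print(cache_positions(5, 1))  # the next decoding step writes slot [5]
print([sum(m) for m in masks])  # next model position per row: [5, 3]
```

After prefill, the padded second prompt writes its next token to cache slot 5, but its model position is 3. Confusing the two is the kind of mismatch described above.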

Quantizing the KV cache below FP8 risks accuracy drops in long sequences, and benchmarks suggest staying with BF16 for critical applications. In distributed inference, an oversized cache may trigger out-of-memory errors. To mitigate this, adjust vLLM's gpu_memory_utilization, which sets the fraction of GPU memory the engine may claim for weights, activations, and KV cache.
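As a rough model of how that setting bounds the cache, the KV budget is whatever remains of gpu_memory_utilization times GPU memory after weights and activations are accounted for. This is a simplification:

- the helper is hypothetical and the numbers are illustrative;
- vLLM's real accounting, which covers activation profiling, block-based allocation, and linear-attention state, is more involved;
- 32,768 bytes per token assumes 8 full-attention layers x 4 KV heads x head dimension 256 x 2 (K and V) x 2 bytes (BF16).

```python
def max_cached_tokens(gpu_mem_gib, gpu_memory_utilization, weights_gib, activations_gib, kv_bytes_per_token):
    """Approximate number of tokens whose KV entries fit in the memory left under the cap (hypothetical)."""
    free_gib = gpu_mem_gib * gpu_memory_utilization - weights_gib - activations_gib
    if free_gib <= 0:
        raise ValueError("weights and activations already exceed the memory cap")
    return int(free_gib * 2**30 // kv_bytes_per_token)

# Hypothetical: layers * kv_heads * head_dim * (K, V) * bytes per BF16 element
KV_BYTES_PER_TOKEN = 8 * 4 * 256 * 2 * 2

for util in (0.75, 0.875):
    tokens = max_cached_tokens(32, util, weights_gib=18, activations_gib=2, kv_bytes_per_token=KV_BYTES_PER_TOKEN)
    print(f"gpu_memory_utilization={util}: ~{tokens:,} cached tokens")
```

The budget is sensitive to the cap. In this example, raising it from 0.75 to 0.875 doubles the tokens that fit. Lowering it leaves headroom for other processes that share the GPU.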

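For video inputs, every sampled frame adds visual tokens and therefore cache entries, so many distinct frames inflate cache size. Limiting the number of frames during preprocessing, for example by sampling at a lower frame rate, maintains efficiency with minimal quality loss.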
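One simple preprocessing step is to subsample frames evenly before they reach the model. The helper below is hypothetical, and 256 visual tokens per frame is an illustrative figure. The real count depends on resolution and on the processor's patching and merging settings.

```python
def sample_frame_indices(num_frames, max_frames):
    """Choose at most `max_frames` evenly spaced frame indices (hypothetical helper)."""
    if num_frames <= max_frames:
        return list(range(num_frames))
    step = num_frames / max_frames
    return [int(i * step) for i in range(max_frames)]

TOKENS_PER_FRAME = 256  # illustrative assumption

indices = sample_frame_indices(num_frames=600, max_frames=64)
print(len(indices), indices[:4], indices[-1])
print("visual tokens before:", 600 * TOKENS_PER_FRAME, "after:", len(indices) * TOKENS_PER_FRAME)
```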

Advanced Implementation Tips

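Set use_cache=True in Qwen3_5Config, or pass it to generate, for automatic KV management. For multimodal sequences, the model derives rope_deltas during prefill; this is the offset between M-RoPE positions and raw token indices. When fine-tuning or writing custom decoding loops, carry rope_deltas forward so that new tokens receive correct positions.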
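The sketch below illustrates the idea behind rope_deltas with a simplified, Qwen2-VL-style M-RoPE scheme. Text tokens advance the temporal, height, and width positions together. A visual grid receives 3D positions offset from the current start index. rope_deltas is the gap between the next position and the sequence length. The function names are hypothetical, and grids are given after any patch merging. Details such as temporal scaling for video are omitted, and Qwen3.5's actual position logic may differ.

```python
def mrope_positions(segments):
    """Simplified M-RoPE position assignment (assumption: Qwen2-VL-style scheme).

    segments: ("text", n_tokens) or ("visual", (t, h, w)) entries; grids are given after patch merging.
    Returns per-token (temporal, height, width) positions and rope_delta.
    """
    positions, start = [], 0
    for kind, spec in segments:
        if kind == "text":
            positions += [(start + i,) * 3 for i in range(spec)]  # all three axes advance together
            start += spec
        else:
            t, h, w = spec
            positions += [(start + ti, start + hi, start + wi)
                          for ti in range(t) for hi in range(h) for wi in range(w)]
            start += max(t, h, w)  # next segment begins after the largest visual extent
    rope_delta = start - len(positions)  # next position minus next cache index
    return positions, rope_delta

def decode_position(cache_position, rope_delta):
    """Position of a newly generated text token during incremental decoding."""
    return (cache_position + rope_delta,) * 3

segments = [("text", 3), ("visual", (1, 4, 4)), ("text", 2)]
positions, rope_delta = mrope_positions(segments)
print(len(positions), rope_delta)  # 21 -12
print(decode_position(len(positions), rope_delta))  # (9, 9, 9)
```

In this example the image contributes 16 tokens but advances positions by only 4, so rope_delta is -12. The next generated token occupies cache slot 21 but has position 9. Dropping rope_deltas during decoding would shift every new token's position by 12.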

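In vLLM deployments, size --mm-processor-cache-gb to match your workload. If most multimodal inputs are unique, caching processed inputs adds overhead with no reuse, and setting the flag to 0 disables that cache. For custom kernels, gated eviction methods such as Fast KVzip can further compress the cache by 30-40% with minimal added latency.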
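This sketch does not reproduce Fast KVzip's own scoring. As a generic illustration of score-based eviction, it keeps a window of recent tokens plus the highest-scoring older entries within a fixed budget. The functions, scores, and budget are hypothetical. In Qwen3.5, only the full-attention layers have a per-token KV cache to evict.

```python
def evict(scores, budget, recent_window):
    """Return sorted indices of cache entries to keep (generic score-based eviction, not Fast KVzip)."""
    n = len(scores)
    if n <= budget:
        return list(range(n))
    window = min(recent_window, budget)
    recent = list(range(n - window, n))  # always keep the newest tokens
    older = range(n - window)
    top_older = sorted(older, key=lambda i: scores[i], reverse=True)[: budget - window]
    return sorted(top_older + recent)

def compact(cache, keep):
    """Drop evicted entries; `cache` is a per-token list, e.g. of (key, value) pairs."""
    return [cache[i] for i in keep]

scores = [0.1, 0.9, 0.2, 0.8, 0.05, 0.7, 0.3, 0.0, 0.5, 0.4]  # made-up importance scores
cache = [f"kv{i}" for i in range(len(scores))]  # stand-ins for per-token (key, value) pairs
keep = evict(scores, budget=5, recent_window=2)
print(keep, compact(cache, keep))
```

In this kind of scheme, kept entries retain their original positions because their keys were already rotated by RoPE. New tokens must therefore continue from the original sequence length, not from the compacted cache length.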

Experiment with NVFP4 KV cache quantization on NVIDIA Blackwell GPUs. It can reduce KV cache memory by roughly 50% relative to FP8, which suits large-batch inference.
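The arithmetic behind that figure depends on scale overhead. Assume NVFP4 stores 4-bit values plus one 8-bit scale per block of 16 elements, and ignore per-tensor scales. The effective cost is then 0.5625 bytes per element, so the saving is a little under half relative to FP8 and about 72% relative to BF16. The dictionary and helper below are illustrative.

```python
# Effective storage per cached element, in bytes. The NVFP4 figure is an assumption:
# 4-bit values plus one 8-bit scale shared by each 16-element block.
BYTES_PER_ELEM = {"bf16": 2.0, "fp8": 1.0, "nvfp4": 0.5 + 1 / 16}

def reduction(new, old):
    """Fractional memory saved by storing the KV cache in `new` instead of `old`."""
    return 1 - BYTES_PER_ELEM[new] / BYTES_PER_ELEM[old]

print(f"NVFP4 vs FP8:  {reduction('nvfp4', 'fp8'):.0%}")   # 44%
print(f"NVFP4 vs BF16: {reduction('nvfp4', 'bf16'):.0%}")  # 72%
```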

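```python
# Example: incremental decoding with the KV cache
from transformers import AutoTokenizer, Qwen3_5ForConditionalGeneration

model_id = "Qwen/Qwen3.5-9B-Instruct"
model = Qwen3_5ForConditionalGeneration.from_pretrained(model_id)
tokenizer = AutoTokenizer.from_pretrained(model_id)  # generic loader for the model's tokenizer

inputs = tokenizer("Hello, world!", return_tensors="pt").to(model.device)
# use_cache=True makes generate() reuse cached keys/values (and linear-attention states) at each step
outputs = model.generate(**inputs, max_new_tokens=50, use_cache=True)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```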

This snippet demonstrates efficient generation reusing KV states.

Conclusion

Qwen3.5's KV cache advancements set a new standard for efficient, scalable AI inference. By adopting these techniques, developers can push boundaries in long-context and multimodal applications. Explore Qwen3.5 models on Hugging Face to integrate these efficiencies into your projects today.